University performance-based funding is bound to fail

Tying a significant portion of funding to any specific set of metrics places undue pressure on universities, and impinges on their traditional mission and collegial autonomy.

Performance-based funding for universities – as recently proposed by Alberta’s blue-ribbon panel report and Ontario’s newly restructured strategic mandate agreements – may at first glance appear to be a good idea. It is not, for a variety of reasons.

In both cases, there is a strong call for a significant proportion of performance to be based on narrow labour-market outcomes, commercialization and economic imperatives. For example, the blue-ribbon report calls for “ensuring the required skills for the current and future labour market, expanding research and technology commercialization … [and] achieving broader societal and economic goals.” This announcement comes on the heels of the Ontario government’s surprising May 2019 announcement that, by 2024-25, 60 percent of Ontario universities’ operating funds would be determined by their performance on 10 metrics. This is a drastic departure from the current 1.4 percent of funding based on performance and is a marked divergence from Canadian university funding models in general.

To be certain, the collection of system-wide data is not a bad idea on its own in order for governments and institutions to improve on goals, offerings and the delivery of a robust postsecondary education. However, when it becomes a high-stakes process, it runs the real danger of skewing university programs and perverting the very objectives it sets out to measure through over-emphasis and, frankly, “gaming” of one sort or another. Perhaps an obvious point, but metric selection is not a neutral act, and tying a significant proportion of funding to any specific set of metrics will invariably place undue pressure on universities to favour and conform to that specific set of metrics, thus impinging on their traditional mission and collegial autonomy.

This point is made more clearly by examining a few of Ontario’s proposed metrics. Take “graduate earnings,” for instance: by focusing on earnings, universities are rewarded for favouring high-paying fields, rather than for developing graduates who are critical, creative and engaged citizens capable of meaningful work (and lives) in a wide variety of areas. It gets right at the heart of the age-old question: What is the purpose of a university experience?

Let’s examine “skills and competencies” next: these in all likelihood will be measured through tests similar to the standardized tests of numeracy, literacy and critical thinking recently piloted by the Higher Education Quality Council of Ontario as part of their Essential Adult Skills Initiative. If so, one need look no further than the mass high-stakes testing craze that has all but strangled sound pedagogy in so many public education districts within the United States and beyond for clues to what could go wrong by expanding standardized testing out of K-12 and into the postsecondary sector.

Moreover, the measures themselves are grossly inadequate. A few 45-to-90-minute, one-shot standardized tests promoted and administered by HEQCO could never capture or compare to any degree program’s existing course and program requirements – each determined and assessed by expert professionals and subject matter specialists. A standard four-year undergraduate experience likely includes 20 to 40 expert “second opinions” diagnosed by a wide variety of professors with a diversity of knowledge, teaching styles and assessment strategies. Privileging one set of computerized standardized tests as a proxy by which to judge a program’s worth is not only misleading, it further erodes academic professionalism and the freedom to teach and assess students as deemed appropriate.

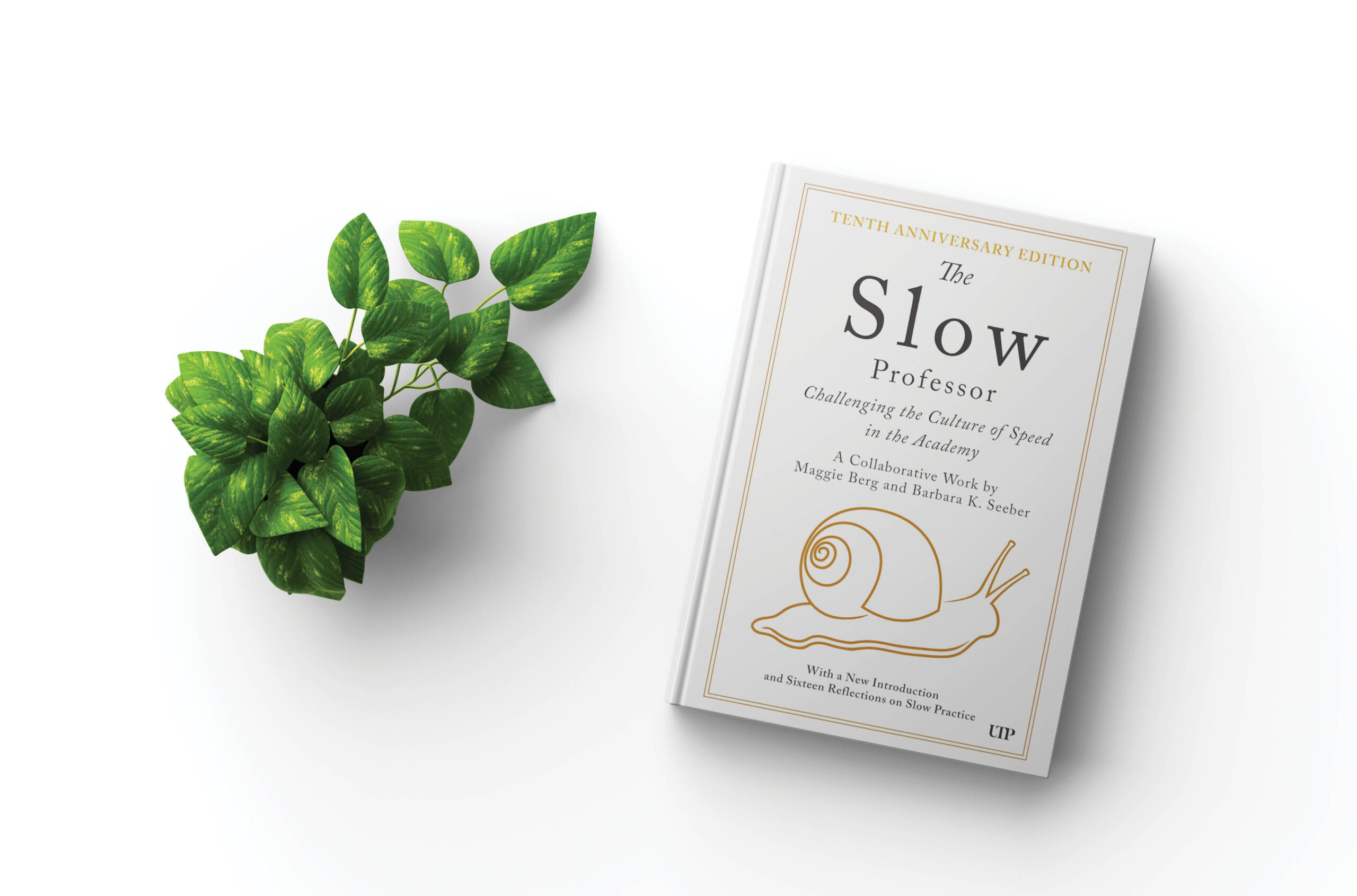

Continuing with this examination, a cursory review of the “research capacity” and “innovation” metrics reveals the inherent bias in equating research capacity and innovation with the simple calculus of total funds received (both from industry and the tri-council). In doing so, the chosen indicators necessarily privilege the types of research that fit into established funding envelope goals and traditional output formats, while devaluing non-traditional scholarship – for example, community-engaged, participatory and Indigenous research approaches. Overlooked altogether is potentially ground-breaking scholarship that requires little or no funding at all (other than perhaps a well-resourced library), or whose funding may be sourced from community-based, non-governmental or even other governmental agencies.

However they are operationalized, performance-based funding models lead to a narrowing of scholarship, of what is possible, both in teaching and research, and inevitably harm society by robbing it of opportunities for risky, yet innovative breakthroughs, as well as valuable areas of research and training that cannot easily be measured by a simple financial calculus. In short, we start to focus on what counts and what is rewarded over what matters, such as a well-rounded citizenry and the diverse array of consequential scholarship, action and critical public engagement our world so desperately needs.

Marc Spooner is a professor in the faculty of education at the University of Regina.

4 Comments

This is a necessary assessment of the wisdom, or lack thereof, in implementing “performance”-based assessment of universities, but it is only a start. To elaborate on two points, first, whenever a metric is co-opted for use in evaluation, especially high-stakes evaluation, it is immediately “gamed,” as Professor Spooner alludes to. Citation metrics are a great example. Initially a neutral measure of how many times an article is cited, they are now routinely manipulated because so many jurisdictions use them in hiring and promotion decisions. Even GDP, originally designed to measure war-making capacity in the US in WWII, distorts and perverts economic investments now that it is used as a goal.

Second, university evaluation is irreducibly complex, and the messy system we have now of faculty evaluating one another may be the best we can do. A nice example comes from The Economist, which in 2015 rated US universities on how well they _improved_ students’ earning potential (https://en.wikipedia.org/wiki/The_Economist_Magazine%27s_List_of_America%27s_Best_Colleges). The top universities are tiny places you have probably never heard of that take average students and get them into good careers. My alma mater, a small liberal arts college that caters to high performers, actually got a _negative_ score, as it takes kids who could earn huge salaries and convinces a decent proportion of them to forgo large salaries in favour of helping the world, e.g., by working for NGOs. Has it failed its students – or the wider world – by promoting selflessness instead of greed? I don’t think so.

The academic community needs to educate the public and policymakers about the dangers of this misguided plan.

Furthermore, the focus on graduate earnings is misleading because it considers earnings in the first few years after graduation rather than life-long earnings. In the first few years after graduation, STEM majors are likely to earn more than humanities and social sciences majors, but “By age 40 the earnings of people who majored in fields like social science or history have caught up. . . . A liberal arts education fosters valuable ‘soft skills’ like problem-solving, critical thinking and adaptability. Such skills are hard to quantify, and they don’t create clean pathways to high-paying first jobs. But they have long-run value in a wide variety of careers. . . . High-paying jobs in management, business and law raise the average salary of all social science/history majors.”

https://it.slashdot.org/story/19/09/29/1951233/liberal-arts-majors-eventually-earn-more-than-stem-majors

Professor Spooner’s observation that performance-based funding is biased towards a narrow definition of research and education that privileges economic utility is, regrettably, just highlighting the intended outcome for many right-of-centre politicians and lawmakers in the US and Canada. The optics of accountability matter more than the exponential costs of reporting and calculation. Ongoing budget uncertainty is in many ways intended to keep universities on the defensive.

The more compelling objection, though, to governments eager to implement performance-based funding, is his observation that these simplistic, high-stakes KPIs will in fact constrain innovation and entrepreneurship in the higher ed sector, when ostensibly the intent is to encourage it.

The unintended consequence of high-stakes KPIs is to reinforce the status quo. Under the proposed Ontario funding model, institutions will be punished for failing to maintain their own three-year average performance on a set of ten metrics. No matter how remarkable the breakthrough, or how it contributes to the prosperity of the province or the country, no institution will be rewarded for outperforming in any way.

Higher ed needs a funding model that incentivizes innovation, excellence and strategic focus.

“tying a significant proportion of funding to any specific set of metrics will invariably place undue pressure on universities to favour and conform to that specific set of metrics” – exactly! All you have to do is look at how the Research Excellence Framework, Teaching Excellence Framework and all the nonsensical league tables in the UK have crushed university staff under a mountain of paperwork and provided a completely dispiriting set of sticks to beat them with. All these measures are introduced without any real discussion about what, exactly, is the “problem” they are trying to solve. They are political expedients used to squeeze budgets and only give money to those institutions which cream off the top students and spit them out the other end into jobs based on nepotism and privilege. The universities which are doing an exemplary job at recruiting non-traditional students or helping students prepare for vital work in advocacy, justice, social support, arts, etc. – which may or may not be economically lucrative but can truly make a difference to people’s lives – are made to survive on less and less. The university where I have worked for over 15 years receives 12% of its funding from government now, but still has to jump through all the absurd hoops just to get that. I honestly believe that their hidden agenda is to drive the majority of the universities into the ground, have many fail, and then withdraw funding and have higher education go completely private (except, of course, for Oxbridge!).